Moving data from sources like AWS S3 into MongoDB is easy with Meltano. It takes just three simple steps to get going.

Many companies use MongoDB as a central document database to make documents readily available and queryable. AWS S3 is one of the most popular object stores and can host a wide variety of files. Moving those files into MongoDB gives you additional options, such as querying their contents quickly alongside your other collections. That is what this walk-through demonstrates, and you can follow along step by step.

In this tutorial we will move several files from AWS S3 into MongoDB and explain all your options along the way.

In order to follow along with the steps given in this tutorial you will need:

- Python 3.6-3.8 (make sure your version is in this range, otherwise the MongoDB target might throw an error)

- A Meltano installation of any kind (Docker, pip or pipx) and a Meltano project

- Your AWS credentials, plus one or two CSV files in an S3 bucket

- A running MongoDB database and its connection string (e.g. mongodb://localhost:27017 if you run one locally)
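A small shell sketch can check the Python requirement before you start. The helper name `is_supported_python` is hypothetical. The script inspects whichever `python3` is on your PATH, and that may not be the interpreter your Meltano installation uses (for example with pipx or Docker).

```shell
# Hypothetical helper: succeeds only for Python 3.6.x, 3.7.x or 3.8.x
is_supported_python() {
  case "$1" in
    3.6 | 3.6.* | 3.7 | 3.7.* | 3.8 | 3.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Ask the python3 on PATH for its version, e.g. "3.8.10" (empty if python3 is missing)
current=$(python3 -c 'import sys; print("%d.%d.%d" % sys.version_info[:3])' 2>/dev/null)

if is_supported_python "$current"; then
  echo "Python $current is in the 3.6-3.8 range"
else
  echo "Python ${current:-unknown} is outside 3.6-3.8; the MongoDB target may fail" >&2
fi
```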

Use Meltano to move data from AWS to MongoDB

Moving data from AWS to MongoDB is a classic task for Meltano.

If you’re moving data to MongoDB, it is usually because you want to unify multiple sources in one document database. MongoDB has good support for integrations. Even so, hand-coding and maintaining a separate integration for every source is a complexity most companies are not willing to take on. With Meltano you get hundreds of sources available out of the box, plus an easy-to-use extension mechanism should you need it.

Getting files from AWS S3 into MongoDB is a simple three-step process with Meltano; let’s get started!

Install a MongoDB target and an AWS S3 tap into your Meltano project

The first step is to add two plugins to your Meltano project:

- an extractor to pull data from AWS S3

- a loader to write data into MongoDB

For AWS S3 we will use the “pipelinewise-tap-s3-csv” extractor. You can add it to your project by running

meltano add extractor tap-s3-csv

To add the MongoDB loader, run

meltano add loader target-mongodb

Each command adds an entry to your meltano.yml file and installs the plugin into your project. Next, we set up the AWS S3 extraction.

Configure the AWS S3 tap to load your CSVs

To configure the AWS S3 tap, provide:

- bucket: your bucket name

- start_date: a starting date for the extractor; only files modified from this date onwards are loaded

You can do so using the Meltano CLI (in our example, the bucket is named “test”):

meltano config tap-s3-csv set bucket test

meltano config tap-s3-csv set start_date 2000-01-01

This also adds these settings to your meltano.yml file. Next, open meltano.yml to specify the CSV file you want to retrieve from AWS S3. Our example is shown below.

Next come your AWS credentials. You most likely have them exported as environment variables already. If so, you don’t need to specify them at all, because the tap picks them up by default. If you don’t have them exported, you can write them into the .env file in your Meltano project. Meltano loads that file automatically and passes the values on to the plugin.
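For the .env route, a minimal sketch follows. It assumes you run it from the root of your Meltano project. It also assumes the tap falls back to the standard AWS variable names, as described above. The values are placeholders.

```shell
# Append placeholder AWS credentials to the project's .env file.
# Replace the values with your own keys before running the pipeline.
cat >> .env <<'EOF'
AWS_ACCESS_KEY_ID=your-access-key-id
AWS_SECRET_ACCESS_KEY=your-secret-access-key
EOF
```

Since .env now holds secrets, keep it out of version control.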

If you authenticate with temporary access tokens rather than a profile, the tap’s documentation explains how to set those as well.

Notice that this extractor is built to pick up arbitrary collections of files. These settings might look like overkill for a single CSV, but they become very useful once you start loading CSVs in large numbers.
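If you prefer the CLI to editing meltano.yml by hand, you can also set the tap’s `tables` setting as a JSON array. Meltano should parse JSON values for array settings like this one. The sketch assumes a file named `raw_customers.csv` in the bucket. That name matches the collection used later in this tutorial. It also assumes an `id` column to use as the key, which you should adapt to your data.

```shell
# One entry per "table": which S3 keys to read and which stream they form.
# search_pattern is a regular expression matched against object keys;
# table_name becomes the stream name; key_properties lists the key column(s).
# An optional "search_prefix" field could limit the listing to a key prefix.
meltano config tap-s3-csv set tables '[
  {
    "search_pattern": "raw_customers.csv",
    "table_name": "raw_customers",
    "key_properties": ["id"],
    "delimiter": ","
  }
]'
```

Afterwards, `meltano config tap-s3-csv list` shows the resulting settings.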

Next, set up the MongoDB loader.

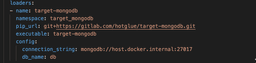

Configure the MongoDB target to receive your data

To configure the MongoDB target, again open the meltano.yml file and edit the entries under “plugins -> loaders -> target-mongodb -> config”. You only need to provide two settings:

- connection_string: your MongoDB connection string in the usual format, mongodb://[${MONGO_USERNAME}:${MONGO_PASSWORD}@]mongodb0.example.com:27017 (the bracketed credentials part is optional)

- db_name: the name of your target database. In our example, the database is named “db”.

You can again use the Meltano CLI to provide these two settings:

meltano config target-mongodb set connection_string mongodb://host.docker.internal:27017

meltano config target-mongodb set db_name db

The host name host.docker.internal typically lets Meltano running inside Docker reach a MongoDB on your host machine. If you run Meltano directly on your machine, use mongodb://localhost:27017 instead. If your connection string references $MONGO_USERNAME and $MONGO_PASSWORD, set these two environment variables before running the pipeline. You can export them in bash or write them into the .env file in your Meltano project.
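Here is one way to wire up credentials. The sketch assumes your Meltano version expands ${VAR} references in meltano.yml at run time, which recent versions do. The host is the example host from the format above, and the credential values are placeholders.

```shell
# Placeholder credentials; replace them (or put them in .env instead of exporting)
export MONGO_USERNAME='your-username'
export MONGO_PASSWORD='your-password'

# Single quotes stop the shell from expanding ${...} now, so meltano.yml stores
# the references and Meltano substitutes the values when the pipeline runs
meltano config target-mongodb set connection_string 'mongodb://${MONGO_USERNAME}:${MONGO_PASSWORD}@mongodb0.example.com:27017'
```

The MongoDB connection string format requires percent-encoding certain characters in the username or password, such as @, : or /.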

A MongoDB deployment holds separate databases (our local one in the picture above has just one, named “db”). Each database in turn holds separate collections of documents. Notice that there is no collection setting to specify. Collections are managed behind the scenes based on the stream names. In this case, the stream name is the table name you provided in the AWS S3 extractor configuration. Next, we load the data!

Do a Meltano run to load data from AWS S3 into MongoDB

Running the pipeline takes just one CLI command:

meltano run tap-s3-csv target-mongodb

Check your MongoDB database to see the newly created collection.

Next, to see how our loader handles updates, we will change our source in two ways: update the contents of our first CSV file and add a second file.

Modify and add another CSV, then run the import again

Go ahead and modify your first CSV; changing any value in an existing row will do the trick.

Then add a second file to import into a separate collection, by adding a second “table” to the tap-s3-csv extractor’s tables configuration.

Note that we could also combine both files into one “table”, e.g. by using a search pattern like raw_customers.csv|raw_things.csv. If we did so, all of their documents would be loaded into a single collection in MongoDB.
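The two-table setup can also be configured from the CLI. This sketch assumes the second file is named `raw_things.csv`, matching the collection name below, and that both files have an `id` key column. Adjust the names and keys to your data. Because `set` replaces the whole value, the first table is listed again.

```shell
# Two entries -> two streams -> two MongoDB collections (raw_customers, raw_things)
meltano config tap-s3-csv set tables '[
  {"search_pattern": "raw_customers.csv", "table_name": "raw_customers", "key_properties": ["id"], "delimiter": ","},
  {"search_pattern": "raw_things.csv", "table_name": "raw_things", "key_properties": ["id"], "delimiter": ","}
]'
```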

Run the import again:

meltano run tap-s3-csv target-mongodb

Then check out the results:

In the first collection, you will notice that the record we changed was updated as expected. The second collection, “raw_things”, was synced into MongoDB as well.

Next Steps & Wrapping Up

Congratulations, you’ve run an extract and load process manually. What’s next? Creating a job and scheduling it to run regularly. The Meltano docs cover this in detail, so be sure to check them out next.
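Here is a rough sketch of that next step. It assumes Meltano 2.0 or later, where `meltano job` exists. The pipeline from this tutorial is wrapped in a job and then scheduled. The job and schedule names are made up for illustration.

```shell
# Bundle the extract/load pair from this tutorial into a named job
meltano job add s3-to-mongodb --tasks "tap-s3-csv target-mongodb"

# Run that job once a day
meltano schedule add daily-s3-to-mongodb --job s3-to-mongodb --interval "@daily"
```

Schedules are executed by an orchestrator such as Airflow, which you add to your project separately.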

In this tutorial you learned:

- why Meltano is a great tool for moving data from AWS S3 to MongoDB

- how to set up AWS S3 extractors and MongoDB loaders

- how to move data from AWS S3 to MongoDB

- how easy (and fun) moving data with Meltano is

If you enjoyed this tutorial, take a look at the Meltano documentation. It contains a great walkthrough of Meltano as well as additional tutorials.