ELT is becoming the default choice for data architectures and yet, many best practices focus primarily on “T”: the transformations.

But the extract and load phase is where data quality is determined for transformation and beyond. As the saying goes, “garbage in, garbage out.”

Robust EL pipelines provide the foundation for delivering accurate, timely, and error-free data.

Luckily we have a community full of data experts that have worked with Meltano, Stitch, Airbyte, Fivetran, and all the big extract and load tools on the market. So we asked them to provide their most important extract and load practices!

We distilled 7 data practices used & loved by the community that will drive up quality for all your data sets, no matter what tool you use.

But wait, why aren’t these “best practices”? Because we consider them pick and choose. If you’re tackling a new project, or don’t yet have many practices inside your extract and load processes, you implement all of them. If you do already have some, complement them with the ones that make sense in your situation.

Setting the Stage: We need E&L practices, because “copying raw data” is more complex than it sounds.

The idea of ELT as a pattern sounds easy, “just copy over the source data first and then run your transformations over the raw data inside your own space”. However, both the word “copy” as well as “raw data” are two words with hidden obstacles.

“Copying” sounds easy. But source data changes, and unless you know what changed, “copying” turns out to be more complicated than you think. Imagine a production table of 150 million “orders” that does come with a “timestamp” but not with a “modified” data. And yes, these exist, all over the place. How do you know that orders got modified, and if so, which ones? For instance, how would you know which orders got “canceled”, an operation that usually takes place in the same data record and just “modifies” it in place.

“Raw data” sounds clear. And yet the idea of extracting & loading implicitly means that usually, you copy between two different technical systems A and B, where you need to adjust the data to match system, B. You ingest from REST APIs and put it into Snowflake, or from an Oracle database into Redshift. Every time you change systems, you will need to modify the “raw data” to adhere to the rules of the new system. You need to do type casting, you need to think about whether you want to “flatten JSONs”, or whether you want to add additional metadata to your data.

Simply “copying raw data” will bring up new questions every time you add a new data source or data target to your list. Even if it is just a new table from the same production database you’ve always been copying from.

These practices will be your guide whenever you take on a new source for ingesting data into your data system.

1. Make each EL run uniquely identifiable – timestamp everything.

We start with arguably the most important best practice: Make every bit of data you load into your data system identifiable and traceable back to the process that got it there.

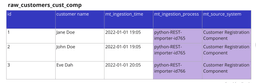

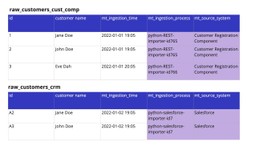

Typical ways of doing this is to include metadata values that capture:

- Ingestion time: the timestamp indicating when the load process started.

- Ingestion process: a unique identifier representing the load process and its instance.

- Source system: Metadata about where the data was extracted from.

Add any or all of these metadata to each row/entry of the data you ingest. We recommend you use the starting time of your ingestor as the “ingestion time” as it simplifies the process. The “identifier of your ingestion instance” should be clear. Don’t just provide the “Airflow-OracleDB-Ingester” as a process, but the “Airflow-OracleDB-Ingester-ID1244” where ID1244 clearly identifies the specific run of ingestion.

One nice benefit of having the source system as metadata is that you’re able to quickly debug problems in downstream dashboards and identify its source. This is also useful metadata for other use cases.

If you have a legacy and a new customer registration component, you can provide the source as a filter option inside dashboards, allowing users to filter for customers from just one system.

2. Deduplicate data at a level beyond the raw level.

There are usually three cases of duplicate data hitting your data systems you will want to “deduplicate”. But no matter the case, don’t do it at the raw/landing level!

The first case is intentional duplicate data, where a source system contains something your end-users or you consider to be duplicates. For instance your CRM system might have two entries for a certain customer that canceled and signed up again. If you deduplicate at the raw level, this means either merging the two or deleting one. Both of which will delete data that is present in the source system.

The second case is unintentional duplicate data, where the source system either deleted a record you still have in your data warehouse, or the source system unintentionally produces duplicate data it will likely delete in the future. Even though this is an “error”, I don’t recommend deleting this data in your raw ingestion area but rather filter it further down the line, for instance in the next stage of your modeling. Otherwise you add logic to your ingestion that is hard to follow up on later.

The third case is duplication happening due to technical restrictions. It might be the case that your ingestion tooling prefers a “at least once delivery” strategy or it might even be a bug in an ingestion process. With “at least once delivery” incremental load strategies, you’re ensuring to get all data rows, but might duplicate some. Again we recommend to keep the duplicate data at the raw level, and filter it down at a later level.

No matter the case, don’t deduplicate on load. Load everything, and keep it there as it is. Do your deduplication later at the next stage.

3. Don’t flatten during EL, do it one stage later.

A lot of the source systems you ingest will return arrays, JSONs, or other nested objects with some kind of hierarchy you will want to break down for further processing. But not at the ingestion level. Take the raw data and dump it as it is in your data system, then have a process do your “flattening”.

A very typical example are JSON objects. Your source might contain a large JSON object and you would like to have it processed into individual columns inside your Snowflake database. This practice suggests to first have a raw table with just your metadata columns and one column “JSON_blob” containing the JSON object. In a secondary step you can then process this data into columns.

The reason for this is that flattening involves business logic. It involves you knowing what properties are “always there”. If you flatten on ingestion, your ingestion process might break because one JSON object is NULL, or one JSON object doesn’t come with one expected value. It is always easier to take the already ingested data and rerun your flattener than it is to run your ingestion + flattening process together.

Additional tip: The same practice leads to avoiding type casting (if at all possible) on ingestion. We recommend doing typecasting after ingestion.

4. Have an immutable raw level.

At some point, you’re going to switch to incremental updates on your data. It’s the time to really keep in mind to have an “immutable raw level” an area where you never, I repeat never, modify or delete data.

Not deduplicating is one part of it (see rule 2), but there is more: In your immutable raw level, you do not remove records removed upstream, you don’t modify data modified upstream. You’re simply loading new data in, that is it.

A great example I still painfully remember is a north star metric dashboard. It showed the current development of the north star metric I worked at, based on customer behavior over the last couple of months. The dashboard and the numbers looked great, moving upwards. Product & management decisions were made on this basis. New records of the north star metric were broadcasted each week.

Then suddenly, one day, the dashboard looked different. Our north star metric shaved 10% of its value, and 30% in a specific segment.

Turns out, one huge customer left, and the records got completely wiped in the regarding system.

Since we were modifying our raw data, we completely messed up the north star metric irreversibly.

From that day on, we used snapshots on all the raw data that might be subject to change.

Do yourself a favor, and have your raw level immutable.

Note: immutable staging areas are effectively what you create with full table syncs if you do not delete data. Also for GDPR and privacy concerns, you might need to make an exception here.

5. Do not transform data on ingestion, not even slightly unless you have to.

There are good reasons to transform data on the fly on ingestion, but almost every case you will think of, also works without. There are two good reasons for transforming data on the fly: legal and security. For all other reasons, you should try to first ingest data, and then run a small transformation on the ingested data.

If you do opt to do a “on the fly” transformation while ingesting data, make sure you make it fail proof. Try to only add data or subtract data, not modify it.

By default, if you do want to do transformations, it is always better to either create a mapping table or ingest a mapping table and do the join there.

You can do so by using mechanisms like “dbt seeds” or ingest Google Sheets maintained by an external contributor.

These practices will ensure your pipelines are robust and thus serve high quality and recent data.

What about you?

What is your practice that saves considerable time on extract and load practices? With which practice do you disagree? Reach out and talk to us! We mean it, we’re available on Linkedin, Twitter and of course in our great Slack community, the place most of these great insights came from!