The modern world is driven by data, and businesses face an ever-growing need for better ways to understand and explore the data they generate. Unfortunately, data rarely arrives in a neatly organized, easily understood form. Rather, it needs to be extracted from the system, then converted to a dataset that’s more useful for analysis. Although it’s possible to manually write code to query and refactor your data for analysis, there are readily available tools on the market that make the job a lot easier, allowing you to simply connect to your source data and bring it into your analytical systems.

Extract, Load, and Transform (or ELT) tools aid in moving data from a source system to a target system. Both Fivetran and Meltano are popular ELT tools and offer a wide range of features. Meltano is open source and lets you use the familiar command line interface to write your ELT program, whereas most other tools, including Fivetran, are drag-and-drop-based. In this article, we will focus on Fivetran and Meltano, learning more about the differences between these tools—and why migrating your existing pipelines to Meltano can be a good choice.

Comparing Fivetran and Meltano

In the following section, you’ll get a brief overview of Fivetran and Meltano.

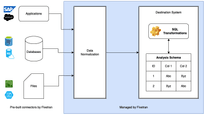

Fivetran is a web-based ELT platform designed with an analyst-first approach. This means there is no code involved in building the pipelines, and its utilization relies instead on drag and drop tools. It’s available in the cloud, and offers a pricing model based on the consumption of data.

Fivetran offers multiple pre-built connectors that you can use to connect to your source data. These connectors are defined by Fivetran, so there’s no manual coding involved in it. You can simply add the connector to your pipeline and configure it with your data source. Once connected to the source system, Fivetran extracts the information and loads it into a target data source of your choice.

Meltano, on the other hand, is an open-source, self-hosted ELT tool. Meltano is extensible and offers a CLI-first approach that allows developers to use the command line interface to build data pipelines. Since Meltano is open source, you can easily integrate with other open-source tools, such as Apache Airflow, for orchestration. You can install and run Meltano on your local machine, in the cloud, or using Docker.

A Brief Comparison Between Fivetran and Meltano

| Meltano | Fivetran |

| Meltano is based on Singer taps and targets. You can easily develop your own custom taps and targets. | Fivetran offers pre-built connectors that can be used to connect to your source data systems. This makes data integration quite fast. |

| Meltano is open source, which means there is no cost involved to run it locally. | Fivetran is a proprietary tool and has a dynamic pricing model. It charges customers based on the number of monthly active rows, charging more when more data is processed. |

| Meltano uses a code-based approach that allows developers to add extractors and loaders as required. | Fivetran’s interface is a GUI that allows users to set up their sources and targets. |

| Since Meltano is code-based, you can easily integrate it with Git and other version control systems. You can add all your extractors, loaders, and transformations to the version control. | Fivetran does not support version control of your data pipelines on the flow. However, you can use a third-party tool like dbt to version control your transformations. |

| Meltano leverages the open-source Apache Airflow library to orchestrate and schedule data pipelines. | Fivetran has a built-in scheduling option, allowing you to schedule when your pipelines should run. |

Though it’s mentioned above, cost is a primary concern for many organizations, and is worth discussing in more detail. Fivetran can be quite costly to use, and you need to pay for each of the data sources and destinations that you use within your ELT pipeline. Their dynamic pricing model can make budgeting difficult, and some users will find themselves faced with having to pick and choose which data sources they can afford to use instead of which ones are best for their purposes. Because Meltano is an open source platform, it avoids this problem completely. It uses Singer taps and targets for setting up the data sources and destinations, and you can use as many as you need, or even develop your own data connectors, without having to worry about how it’s going to affect your bottom line.

Meltano as an Open-Source ELT Tool

As an open-source data integration tool, Meltano provides agility and flexibility while developing your data pipelines. Instead of importing a default set of multiple connectors you don’t need, you can create modular structures of code by using only the extractors and loaders that are required by your system. Meltano is supported by a thriving community of data and software engineers who contribute towards the development of plug-ins and other tools, resulting in faster shipping of features. Finally, because Meltano is open source, you have complete authority and control over how it is deployed and managed within your organization, which makes it one of the most customizable ELT frameworks in the modern data world.

Meltano’s features such as CLI-first design and source control with Git make it stand out among data integration tools. Additionally, moving to Meltano can be a huge savings on data processing costs.

Planning a Phased Migration from Fivetran to Meltano

While building and managing data applications are resource-intensive tasks, migrating your data pipelines doesn’t have to be. Planning your migration strategy is one of the key factors in ensuring a smooth transition from your existing system to a new one. When migrating from Fivetran to Meltano, there are several important steps that you’ll want to consider ahead of time.

- Analyze your existing data sources and targets. In this step, you need to take into account all the data sources that you would want to migrate, and create an inventory of the sources and targets, as well as of all the tables and columns with additional requirements, if any. This step is crucial to ensure that you don’t miss any of your data sources in the migration.

- Get an idea of the transformations involved. You should consider making a source-to-target mapping document that tells you how the data is transformed along the way to the destination. Fivetran is an ELT application, which means it will allow you to extract and load your data to your target systems, then transform the data on the target. If there are any transformations already in place, you should make note of them so that after migration you can apply them in Meltano using dbt. At the time of writing, Fivetran integration with dbt is in beta. It’s worth considering migrating your Fivetran transformations to dbt, then migrating the entire pipeline to Meltano. This step will allow you to replicate the existing transformations smoothly within Meltano.

- Ensure a proper data validation system is in place. After the pipeline migration has been completed and the new system is in place, it’s time to start moving the data from the source system to the target using Meltano. When you do this, you should be sure to validate the migrated data. Some tools have built-in options for data integrity checks—for example, dbt ensures data integrity with checks such as unique, not null, etc. You could also validate your data using something like Great Expectations, which expedites the validation process by profiling your data and auto-generating assertions to test against. After testing, it generates an easily readable data quality report. Validation techniques like these will ensure you have correct data in place after the migration has been completed.

- Plan for maintenance and security. Once you know that the data has been migrated and validated successfully, security measures can be added as a part of initial routine maintenance. You can consider creating various access control roles within your new data target and define what roles have access to which content within the database. Another way of implementing security within your database is by masking your sensitive columns. This will ensure that data is always safe within your database, so there’s less chances of data compromise.

In the next section, you will learn how to get started with Meltano on your local machine and run a simple ELT program.

Installing Meltano on your local machine

In order to run Meltano on your local machine, you need to have Python 3.7 or later installed. If you already have Python installed, you can check the version by using the following command.

python3 --versionOnce you know your Python version is sufficiently recent, you can create a virtual environment using Python and activate it using the commands as follows.

python3 -m venv .venv

source .venv/bin/activate

Now that the virtual environment has been created, you can install Meltano using the Python package manager, pip, and check if the installation has been completed successfully.

pip install meltano

meltano --version

With Meltano installed on your machine, the next step would be to create or initiate a project, which can be done using the following command. You need to provide a name for your project, and a directory will be created to contain the skeleton of the Meltano project.

meltano init migration-fivetran-meltano-project

Once Meltano completes the initiation of your project, you can view all the files and folders that have been created for you. All the configurations of your project will be stored in the Meltano.yml file. Now you’re ready to get started with Meltano.

Configuring Meltano Extractors, Loaders, and Transformations

Once your Meltano project has been created, it’s time to configure your extractors, loaders, and transformations. Extractors are used to connect to the data sources, and loaders are used to write data to destination sources. Meltano supports dbt transformations that you can use in your data pipeline. In the following section, you will look at a simple data pipeline that can be used to extract data from a source CSV file and load it to a JSON file as a target destination.

The CSV file is quite simple, and contains four rows of data, as shown below. Add the CSV file under the extract directory created by Meltano. This is where Meltano will look for the source files when the data pipeline is executed.

Set Up Your Extractors for Data Sources

Now you can add the CSV extractor using the following command on the terminal.

meltano add extractor tap-csvHead over to the Meltano.yml file and add the configurations for your source data, which in this case is CSV. You need to add entity, path, and keys for each of the source files that you want to use in the data pipeline.

plugins:

extractors:

- name: tap-csv

variant: meltanolabs

pip_url: git+https://github.com/MeltanoLabs/tap-csv.git

config:

files:

- entity : source

path : extract/meltano-source.csv

keys : [Id]

Set Up and Configure Your Loaders

Similarly, you can add a Meltano loader to which you can export your data. In this section, you can choose any loader of your choice from the list of available loaders on Meltano Hub. To add a PostgreSQL loader to the Meltano project, you can use the following command.

meltano add loader target-postgresSet Up and Configure Your Transformers

Meltano uses dbt for transformations within your databases, which in turn uses plain SQL to define the transformations. You can add a dbt transformer to your project using the following code.

meltano add transformer dbtTo learn more about transformations using dbt and configurations, please refer to the official guide.

Validate the System

Now that the extractors, loaders, and transformers are included in the project, you can validate the data pipeline by running it. When you run the data pipeline, Meltano will read the data from the CSV file and load it into a table in PostgreSQL. If you apply any transformations using dbt, those transformations will be handled in the same pipeline. To keep things simple, there are no transformations in this example pipeline.

meltano elt tap-csv target-postgres --job_id=csv-to-postgresOnce you run your ELT pipeline using the command above, you can see that the source data has been extracted from the CSV file and loaded into a new table, public.source, on your PostgreSQL database.

You can also design and develop complex data pipelines using multiple layers that help create staging and production layers while applying data transformations across each of those layers.

Conclusion

In this article, you have learned about Meltano and how it compares with Fivetran. Because it’s an open-source data integration tool, Meltano can be adopted and integrated with other popular data tools such as Airflow and dbt. You can migrate your existing workflows from Fivetran and set up similar data pipelines in Meltano at a much lower cost, and with full ownership of your data. If you’re looking to migrate data, apply transformations on the fly, or simply make your pipeline more robust, Meltano’s got you covered.

Guest written by, Aveek Das. Thanks Aveek!