11 Advanced best practices for working with data ingestion pipelines.

Whether you use an automated data movement platform or hand-code all your ingestion processes in Python, nothing truly delivers value to your end users with just a few clicks.

Getting data from many sources into your data warehouse, data lake, or any other system will stay a long-term development process.

So it does sound like using software engineering development best practices is a great idea, right? Not so fast, though. Not everything software engineers do translates well to data engineering, especially data ingestion.

Not everything software engineers do translates well to data engineering, especially data ingestion.

But a couple of essential practices do, and they are powerful, making data ingestion a piece of cake. The practices of

- Keeping code changes small

- and simple

- and pushing the changes into production fast through automation and testing.

We surveyed tons of data engineers to see how they do it! We picked out the highlights in the list below!

These practices apply to all tools, whether you’re on Stitch, Airbyte, Meltano, or plain old Python. So let’s see how you can make your data ingestion development process faster and more safe at the same time.

#1: Be picky on tables; go all-in on columns

What: Ingest all columns for a new table, but don’t ingest additional tables unless necessary. Keep your ingestion code simple. Better go for one less table than one too many.

Why? Let us look at an example!

Example pipeline

Our example data team runs a simple data stack.

- Ingesting most data from the operational Oracle database, “users” and “orders.” tables.

- Running the ingestion via Python.

- Doing some renaming cleaning into a stg_model for both users and orders.

- Then an aggregation into users and users_orders model via dbt again.

The data team ingests the “age” column from the user tables in our example. Does it need it? We have no idea!

Getting the entire table is more straightforward than selecting individual columns.

On the other hand, the team opted not to ingest the “order_shipping_data,” even though it does sound helpful.

Why: Fewer tables and no selection on the column level means your ingestion logic will be inherently simple. More straightforward logic is easier to version and easier to test.

Full tables will also future-proof your work. If tomorrow, the sales manager needs user data aggregated by age groups; our data team doesn’t need to ingest additional data.

A critical insight is that if you had to add a missing column you excluded initially, you must run a complete full refresh of the entire table. Whereas skipping an entire table has no such downside.

Things to remember: (1) Governance and privacy. Don’t let simplicity keep you from dealing with privacy and security. These topics always add complexity, no matter how you implement them.

(2) This advice does NOT apply to REST APIs. For most table-based sources, like most databases or CSV files, filtering columns is more effort.

That’s usually not true for REST APIs. For REST APIs, you should only consider the “future-proofing” aspect. That means you should be greedy on the data side and consider potential use cases that might arise in the next 6 months.

Example for Rest APIs: If you’re hitting the ExchangesratesAPI to get the exchange rates from GBP to USD, CAD, and EUR, you can apply the idea of future-proofing.

What other countries is your company planning to roll into? Into Japan or China soon? Then it might already be reasonable to add YPN and CN as well.

#2: Prefer log-based > full table > key-based replication

What: There are lots of data replication techniques. If possible, opt for a change-log-based replication; if not, try to take a full table copy every time you ingest, replacing your old version. Once the full table no longer scales and log-based replication isn’t available, go for incremental key-based replication.

Example: Our team uses log-based replication for the orders table. That means they don’t have to deal with how changes are made to the orders table.

The software engineering team behind it hasn’t inserted a “modified at” or “created at” column. So it is not possible to do key-based replication.

Why: Log-based replication means you don’t need to deal with update logic. It means your actual change is small and precise; it is just the log entry. That means you get hard deletes for free! You need a lot of work outside of log-based replication to detect hard deletes.

But there’s a huge caveat: Log-based replication is technically challenging.

So you can only use it when available; some tools offer it, and some don’t. We won’t recommend you try to implement it on your own.

How: We can share two excellent articles on the topic of incremental loads:

- Tobiko Data with “Correctly loading incremental data at scale.”

- And Pedram with “Doing Data the Hard Way Part 1: Extracting”

#3: At least once > exactly once > At most once

What: You’re on incremental key-based replication? It turns out there are still three different ways to “deliver.” Prefer to get each row of your source table at least once, over having it exactly once, over at most once.

This needs explaining!

Why: Having an order arrive at most once inside our data warehouse sucks. Because it means we could miss data. We want to have it “exactly once,” right? But turns out that implementing “exactly once” is problematic.

“Exactly once” replication often promises uniqueness where there is none. And that non-transparency can cause serious harm to your downstream data pipelines.

Note: The problem with “exactly once” replication is that unless your source system handles the replication, there’s one way to guarantee it: To run a complete comparison on every single value of your source and target database, then select the rows that differ.

It’s impractical since this is even more complex than just doing a full table copy again.

An exception to the practice: Should you agree with the data source on a straightforward way of signaling the complete changes, e.g., by keeping a (unique!) “deleted_at” and “modified_at” timestamp in each source table, you can implement exactly once based on these guarantees without much hassle.

How: If you use SQL filters to find and select your data, opt for a “>=” instead of a “>” starting with your last incremental key entry. That’s how you implement “at least once” instead of “at most once.”

However, as everyone will point out right away, this will produce duplicates, so let’s talk about duplicates and how to handle them!

#4: Expect duplicates, everywhere

It’s not just “at least once” delivery that produces duplicates in data.

What: Never assume that a table does not contain duplicates. Not your source, raw, staging, or model tables. Always have both mechanisms for deduplication and tests for duplicates.

Examples: Our data team has a deduplication logic inside the stg_users and stg_orders models. And on top of this, it has a test for uniqueness on the aggregated tables.

Why: There are a ton of reasons why duplicates appear. Some popular ones are…

- You often end up merging data from multiple sources into one model

- You might opt for at least one delivery in your EL choice on some models. Thus you end up with a small set of duplicates automatically

- You’re getting your data from somewhere! Even if the source says something is unique, it might not.

- Replays happen; sometimes, you’re processing duplicate data, especially if you have incremental models running.

How: How to deal with duplicates is dependent on your architecture; some common ways are:

- Allow and have them in your raw data area. Otherwise, you might lose data.

- To deduplicate in the step from raw => stg model

Note: Remember, “distinct count” operations can be costly. So take a second look at duplicate tests, and find a good balance here.

Thanks to Nerstak on Reddit for pointing this out!

#5: Test your assumptions

What: If your data ingestion processes break, it is more often than not because the data source you are ingesting changed its assumed behavior.

Example: Our data team assumes the columns in our user data column will always stay there. It also assumes the “age” column holds a number. That’s what they could build an “age group” model onto.

But one day, a column might be added or dropped. Or the column “age” might suddenly only contain age ranges, not numbers.

These are assumptions our data team has and should test against.

How: There are a lot of ways to test your assumptions. The simplest way is using dbt tests on your raw models.

You can always use custom SQL statements to test assumptions (you can do that with dbt as well btw.!).

Tools like great expectations make this endeavor more serious. Custom SQL or bash scripts will also do the job, and you can leverage data warehouse facilities like indices….

#6: Run complete tests on every change

Example: Imagine our team added another column to the orders table they are ingesting (of course, the ignored advice #1 then!).

What can then happen is that the data transformation breaks – even though no new data is transformed yet. How is that possible? In reality, many things can happen simply because the data coming in is new. In our example, the source team could’ve changed the data type of the ID column, making it a uuid.

What: Run complete tests, including the data transformation tests, even if you change one tiny detail on the data ingestion and do not touch the transformation. Software engineers call this an integration test.

How: Chain up a dbt test run after each pipeline test you have already set up.

#7 Create a prod-like environment

What: Have a production-like environment ready, and utilize it for your final QA of any change.

Example: Test_raw; test_stg test_model schemas inside the snowflake account. Dev can trigger a complete run and get all the data into these.

Why: No one wants to “click-and-cross-fingers” whenever a new change finally makes it into production. You should be able to feel confident nothing will break, not today, not tomorrow.

#8 Utilize a data dev environment

What: A development environment is your workshop as a data engineer. It should be a place you can walk into (simple to access), with everything organized to speed up your work (optimized for your workflow).

Why: It should be simple to access because new people are joining your team. Because your team is task-switching, you might need to switch laptops, and setup is always a huge pain.

How:

A bunch of common ways teams set this up is:

- Having fixed test data, possibly held inside CSV files, can then be used by dbt seed to run models as tests.

- A simple shell script that creates a “user234_schema” with a sample of the production data.

- Have a utility function to run ingestion into a local Postgres limited to data from “1 hour ago”.

- Zero-copy clones and similar features are becoming common in the leading data warehouses.

Also, have a look at SQLMesh, it’s a dbt alternative specifically developed to address these kinds of challenges, to make change management simpler. We recommend their quick start guide first.

#9 Version everything

What: Keep everything in version control. Scripts you ran once to do a replay, your infrastructure, your connectors, python versions, dependencies. Use docker if possible or venvs.

Why: Because the trouble with a bug starts when you do not know where to look.

How: Depending on your setups, you must be creative here.

But even if you run a legacy ETL tool, you can still version almost everything. For instance, Pentaho Data Integration allows you to export the “repository.” It also has a dockerized version.

Other SaaS tools offer APIs you can leverage to copy most of your configuration.

#10: Have a 5 minute dev setup

A confession: I don’t have an IDE set up. I only code once or twice a week, so I don’t primarily need it.

But when I need it, I’m super fast because I got this:

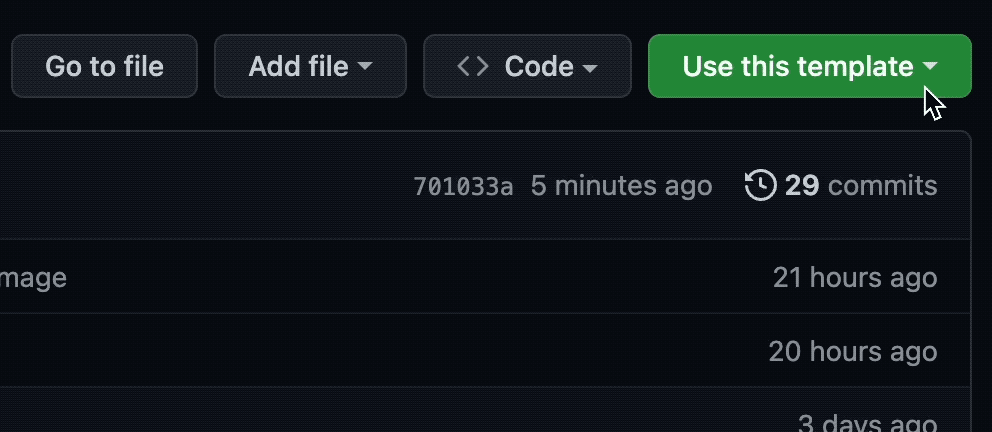

For me launching a new meltano project with linting, testing and an IDE is a easy as this:

That’s what a developer setup can look like. And yours should be just as simple.

Example: Our data team uses GitHub codespaces with a docker container. It contains all linters, test scripts, and database connections a data engineer needs.

What: Anyone who touches a new data engineering repository/workflow should be able to make and test their changes within 30 minutes.

How: You can utilize a bunch of techs to make this work however you want. Examples are:

- Venvs

- Docker

- Codespaces

- Make or basic shell scripting

- Pre-commit hooks

Note: I also use GitHub secrets to preload important stuff into my setup, like our snowflake connection.

#11. Run everything through a CI CD system

We’re biased because CI/CD systems work so well for us!

What: CI/CD systems like GitHub Actions and GitLab CI are all about automation. They ensure your work is reproducible and take cumbersome tasks off your hands.

Why: Because you’re not alone. Why let humans forget things a machine can automate?

How: GitLab and GitHub Actions will do the job for you, almost for free! All you need to learn is a bit of YAML config.

Examples:

At Meltano, we’re heavy user of CI/CD systems. We can outline a few examples of what we do with them:

- Regular (so-called scheduled) jobs check whether our ingestion processes remain intact.

- Linting all YAML, SQL and markdown. Not just testing for correctness but also correcting problems.

- Checking the titles of our PRs to collaborate better (semantic PR titles are super helpful!)

- Running our newly developed pipelines in an integration test environment before promoting them into production.

- Giving us the ability to roll back a broken deployment to the previous state.

Needless to say, we love CI/CD systems inside our data ingestion processes.

If you came all this way, be sure also to read the first part of this series, “5 Helpful Extract & Load Practices for High-Quality Raw Data – Immutable raw areas, no transformations, no flattening, and no dedups before finishing your excavations”.