Data is Transforming the Enterprise

Guest post written by Ethan Batraski, Partner at Venrock.

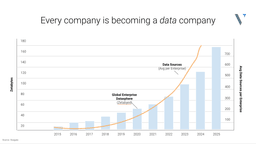

The amount of data being generated and stored has exploded in scale and volume. Every aspect of the enterprise is being instrumented for data, with the goal of unlocking new capabilities and building an edge. This data is beginning to rapidly transform every industry.

This transformation started with the realization that data can operationally drive and power every aspect of an enterprise, rather than just analytics. Data is allowing enterprises to respond to changes in their businesses faster than ever before, and the volume of that data is increasing exponentially. Even as we navigate uncharted inflation, COVID uncertainty, a strained supply chain, quantitative tightening, and global unrest, data allows enterprises to drive down costs, unlock new capabilities, and maintain competitiveness.

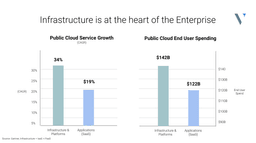

As we’ve seen, major dislocations come as a result of new mediums or computing platforms: from mainframes to servers, then to virtualization, then to the cloud. We are now entering the cloud data era, with the infrastructure at the heart of it all.

The Cloud Data Infrastructure Era

The last two years of remote work have been a tailwind for digital transformation across every sector. Every CIO and CTO is being asked about unlocking data as a competitive advantage to increase decision velocity and quality. As a result, modernizing the data platform has become a top priority. In layman’s terms, this means building a set of capabilities to move data from data generation (logs, orders, events, etc.) through processing to applications or services as quickly as possible.

Companies such as Astronomer (with Apache Airflow) and Decodable (with Apache Flink) provide key services in the new data stack, and more services are emerging to handle data ingestion, transformation, data quality, data processing, cataloging of data and tables, schema management, and testing, all of which are required to build a stable and performant data stack.

But the way you currently build and manage data infrastructure looks nothing like building and managing a software application. There are no isolated environments for development and testing, no code reviews, no unit tests, and no version control. Making updates to your data infrastructure is akin to making edits directly in production, and it often leads to outages, data quality issues, and constant firefighting.

That Was Until We Met Meltano

Meltano spun out of GitLab’s vision of creating a better way to build software. The company realized that the DevOps best practices adopted by software developers could be easily implemented for data, with the same positive impacts. GitLab realized that data teams needed to operate much more like software or application teams, which meant using software development lifecycle (SDLC) principles to enforce high-quality, performant, and secure data services and infrastructure.

Meltano started as an open-source project and grew rapidly in popularity with its approach to solving the data ingestion and loading challenges most developers faced. This was the start of introducing software principles for building data services. Meltano grew to more than 2,000 active developers in its Slack community, with more than 1,000 organizations and enterprises running hundreds of thousands of active pipelines.

Introducing Meltano 2.0: Data Infrastructure That Will Grow With You

Today, Meltano announces Meltano 2.0, a new way to build and scale up your data infrastructure by introducing an end-to-end control plane for your data services, making it the infrastructure and DevOps for your modern data stack.

Meltano 2.0:

- Introduces a much easier, opinionated way to deploy and connect the various data services that make up your data stack

- Applies the SDLC principles to data engineering

- Provides setup in minutes, enabling you to try out new tools, easily replace old ones, and experiment locally

Now, with Meltano, you can spin up a service or tool (Airflow, dbt, Great Expectations, Snowflake, etc.) and easily configure, deploy, and manage it through a single control plane. Meltano provisions the services, manages the deployments, turns the configurations into code in Git, and version controls all changes while enabling end-to-end testing and isolated environments for new changes. Installations and deployments become standardized, and all the complexity of managing the various tools and services is abstracted into Meltano.

A data team can easily spin up a new data flow with Airflow for orchestration, dbt for transformation, Singer taps for ingestion, Great Expectations for testing, and Superset for analysis—all managed by a single platform. You no longer need to install, deploy, configure, manage, and stitch the services together.

Here is a real-world example that a lot of us have faced many times over. Let’s say you update a schema (change the data representation) for a data pipeline, and that pipeline writes to a table that now needs to store data in the new schema. Any dashboard or service that relies on the table will now break. With Meltano, data teams can deploy these changes into isolated development environments and run end-to-end tests to safely make and verify changes locally before pushing them into production. If something breaks in production, you can just roll it back.
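To make the schema example concrete, here is a minimal Python sketch of the kind of check a data team might run in an isolated environment before promoting a schema change. It is not Meltano's API: the `Schema` alias, the `breaking_changes` function, and the column names are all hypothetical, and a schema is assumed to be a simple mapping of column name to type name.

```python
# Hypothetical representation: column name -> type name.
# This is an illustrative assumption, not a Meltano data structure.
Schema = dict[str, str]


def breaking_changes(old: Schema, new: Schema) -> list[str]:
    """Return the changes that would break consumers of the old schema.

    A removed column or a changed column type is treated as breaking.
    Newly added columns are ignored, since existing readers do not use them.
    """
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column: {column}")
        elif new[column] != old_type:
            problems.append(
                f"type changed: {column} {old_type} -> {new[column]}"
            )
    return problems


# Illustrative schemas for an orders table (made-up column names).
current = {"order_id": "int", "amount": "float", "created_at": "timestamp"}
proposed = {"order_id": "str", "amount": "float", "placed_at": "timestamp"}

for problem in breaking_changes(current, proposed):
    print(problem)
```

A check like this could run as a test in a development environment, so a dashboard that depends on `created_at` fails there rather than in production.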

Partnering with Meltano

We have long said the way we build data services and infrastructure needs to mirror how we build applications and software: from a single pane of glass, with package managers, consistent workflows, isolated environments, end-to-end testing, and the flexibility to use the best tools for the job. From small and new companies setting up a data stack for the first time to larger enterprises moving off a legacy data stack, the future of the data stack will look a lot more like software than it does today, and we know Meltano will be at the center of it.

Venrock is excited to announce its partnership with Meltano in its Seed round, along with GV and GitLab, as we work toward this exciting future.