An isolated test environment is a set of computing resources (hardware, software, and configuration files) that work together, separately from the production environment, to execute test cases and verify system behavior. It runs on a separate network or on separate computing resources, so applications running in isolation can’t access applications outside their environment.

These test environments are configured with the same specifications as the production environment, so you can confirm that the system meets production requirements before it goes live.
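As a rough illustration of that parity requirement, the sketch below compares a production configuration with a test configuration and reports every setting that differs, apart from the ones that are deliberately isolated (such as the target database). The setting names and values are hypothetical examples, not taken from a real deployment.

```python
def config_drift(prod: dict, test: dict, isolated_keys: set) -> dict:
    """Return settings whose values differ between prod and test,
    ignoring keys that are meant to differ (e.g. the target database)."""
    drift = {}
    for key in sorted(prod.keys() | test.keys()):
        if key in isolated_keys:
            continue  # these settings are supposed to point somewhere else
        if prod.get(key) != test.get(key):
            drift[key] = (prod.get(key), test.get(key))
    return drift


# Hypothetical settings for illustration only.
prod = {"warehouse": "COMPUTE", "dbname": "PROD", "python": "3.8.10", "timezone": "UTC"}
test = {"warehouse": "COMPUTE", "dbname": "TEST", "python": "3.8.10", "timezone": "Europe/Berlin"}

print(config_drift(prod, test, isolated_keys={"dbname"}))
# {'timezone': ('UTC', 'Europe/Berlin')}
```

Any entry in the result is a difference that could make a test pass in isolation but behave differently in production.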

This article will introduce you to isolated test environments and their benefits, and show you how to implement an isolated test environment with Meltano and Snowflake.

Why You Need an Isolated Test Environment

It’s hard to predict how a system that relies on external resources and other dependencies will behave, so isolated test environments are useful for simulating system behavior in unfamiliar runtime environments. It’s also important to isolate test dependencies from production dependencies, because a faulty dependency can cause a system failure even when the implementation itself is correct. Testing in isolation helps confirm that dependencies work before they’re shipped to production.

In systems with complex relationships between components (for example, inserting a record into a table with complex foreign key relationships), testing units of software in isolation is necessary to get accurate results. These environments are also used to isolate test users so that they don’t interfere with mission-critical systems such as mail servers and financial record databases.
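One common way to keep test runs away from a real mail server is to pass the sending function in as a dependency, so a test can substitute a fake that only records messages. The sketch below uses a hypothetical `notify_users` function and `FakeSender` class; neither is part of Meltano or Snowflake.

```python
from typing import Callable, Iterable, List, Tuple

Sender = Callable[[str, str], None]  # (recipient, message) -> None


def notify_users(emails: Iterable[str], message: str, send: Sender) -> int:
    """Send `message` to every address and return how many were sent.
    The `send` callable is injected, so tests never touch a real mail server."""
    count = 0
    for address in emails:
        send(address, message)
        count += 1
    return count


class FakeSender:
    """Test double that records messages instead of delivering them."""

    def __init__(self) -> None:
        self.outbox: List[Tuple[str, str]] = []

    def __call__(self, recipient: str, message: str) -> None:
        self.outbox.append((recipient, message))


fake = FakeSender()
sent = notify_users(["qa@example.com", "dev@example.com"], "Test notification", fake)
print(sent, fake.outbox)
```

In production, the same function would receive a real sender; the notification logic itself does not change between environments.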

Use Cases for Isolated Test Environments

Isolated environments are essential in a variety of DataOps processes, such as the following:

- Bug detection: Bugs are more easily detected when units of software are tested in isolation.

- System security: Testing in isolation exposes security vulnerabilities before the system is deployed to production, which improves overall security by reducing the chance that a flaw reaches production.

- System productivity: Changes introduced directly to a system in production may cause a breakdown and resulting downtime; to avoid that, systems are tested away from production environments, which helps to increase overall system productivity.

- Computing resource management: By carrying out system tests in an isolated environment, you can avoid interference between different test runs and also avoid conflict in managing and sharing computing resources.

- Package management: When packages are handled by isolated environments, all tests use the same dependencies, which eliminates local dependency problems and makes package management much easier. When tests don’t run in an isolated environment, file changes may affect the host environment.

- Continuous integration (CI): Isolated testing can be incorporated into the CI process (build stage) to ensure code is ready for immediate deployment.

- Completeness: A wide range of system behavior under unfamiliar conditions and computing environments can be simulated and tested in an isolated environment.

- Accuracy and precision of output: Isolated testing lets you check each unit of software and fix defects before it is integrated into the production system. Because faulty units are caught before integration, the overall system is more likely to produce precise and accurate output.
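To make the resource-management and package-management points concrete, here is a minimal sketch, using only Python’s standard library, of running a test step inside a throwaway working directory so that files it writes never land in the host project. The `isolated_workdir` helper and the file name are hypothetical.

```python
import os
import tempfile
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def isolated_workdir():
    """Run the enclosed block in a fresh temporary directory, then
    restore the original working directory and delete the sandbox."""
    original = os.getcwd()
    with tempfile.TemporaryDirectory() as sandbox:
        os.chdir(sandbox)
        try:
            yield Path(sandbox)
        finally:
            os.chdir(original)  # leave the sandbox before it is deleted


host_dir = os.getcwd()
with isolated_workdir() as sandbox:
    # A relative path now resolves inside the sandbox, not the host project.
    Path("isolated_run_output.txt").write_text("written by a test run")
    created_inside = (sandbox / "isolated_run_output.txt").exists()

print(created_inside, Path(host_dir, "isolated_run_output.txt").exists())
```

Each test run gets its own directory, so parallel runs don’t overwrite each other’s files either.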

You’ve now explored what isolated test environments are and how they come in handy. Next, we’ll move on to a short guide where you’ll learn how to implement an isolated test environment with Meltano and Snowflake.

Implementing a Snowflake Data Extraction with Meltano

We’ll use a test scenario in which your company wants you to implement an email notification system using email addresses from a customer database in Snowflake. For obvious reasons, you wouldn’t want to carry out this test on the production database.

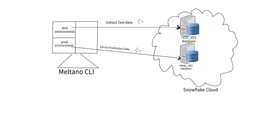

The step-by-step instructions cover how to create a Meltano project that reads data from and writes data to Snowflake databases. You’ll install and configure a Snowflake extractor (tap-snowflake) and a loader (target-snowflake) in your Meltano project. Then, you’ll create and configure two isolated environments, each of which reads from and writes to a different Snowflake database.

As mentioned, the implementation requires two isolated environments: a prod environment that reads and writes data in the PROD database, and a test environment that reads and writes data in the Snowflake TEST database. The TEST database stores test user data, including example records for co-workers or team members who can receive a test email notification.

The test environment uses the developer’s username as a prefix for the database schema name, so each developer pushes only to a schema prefixed with their own name.

After a test has run in the isolated test environment, you switch to the prod environment to work with production data.
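Because the test database deliberately holds test users and co-workers, a second safeguard for this scenario is to refuse to email anyone outside an approved domain unless the run is in prod. The sketch below is a hypothetical guard for the notification system; the `allowed_recipients` function, the `example.com` domain, and the environment names passed in are assumptions for illustration.

```python
from typing import Iterable, List


def allowed_recipients(emails: Iterable[str], environment: str,
                       internal_domain: str = "example.com") -> List[str]:
    """Outside prod, keep only addresses on the internal (hypothetical)
    domain so a misconfigured test run cannot reach real customers."""
    cleaned = [e.strip().lower() for e in emails if e and e.strip()]
    if environment == "prod":
        return cleaned
    suffix = "@" + internal_domain.lower()
    return [e for e in cleaned if e.endswith(suffix)]


rows = ["Alice@Example.com", "customer@gmail.com", "  bob@example.com "]
print(allowed_recipients(rows, "test"))  # ['alice@example.com', 'bob@example.com']
print(allowed_recipients(rows, "prod"))
```

The guard complements environment isolation: even if a test run were accidentally pointed at production data, it would only email internal addresses.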

Prerequisites

You’ll need the following to get started:

- A command line interface or terminal to run Python commands

- A recent version of Python programming language (Python version 3.8.10 was used for this article)

- A recent version of pip, the Python package installer (pip 22.3 was used for this article)

- A Python virtual environment (optional but recommended)

- A recent version of Meltano installed on your machine (Meltano version 2.8.0 was used for this article)

- A Snowflake account

- An extractor (tap-snowflake was used for this article)

- A loader (target-snowflake was used for this article)

Getting Started with Meltano to Read and Write Data to Snowflake

Start by creating a folder to hold all of the Meltano project files. Create a project directory named isol-test-meltano by executing:

```shell
mkdir isol-test-meltano
```

Now you need to make the directory a valid Meltano project. Initialize the directory you created above by executing:

```shell
meltano init isol-test-meltano
```

Run all subsequent commands from inside the project directory, i.e., after changing into it. Once the command is executed, it generates several project folders and files. The important ones are:

- The .meltano folder: This folder indicates that the directory was initialized as a Meltano project.

- meltano.yml: This file defines the tools and configurations used in the project. Anytime a plugin is added or configured, the file is modified to effect the changes. Below is the default meltano.yml file content generated at the initialization of the project:

```yaml
version: 1
default_environment: dev
project_id: bc911c21-8b29-44dd-8c01-5327ec3ded7b
environments:
- name: dev
- name: staging
- name: prod
```

When no environment is activated, Meltano uses the default_environment (dev). The project_id is the unique identifier that distinguishes each project, and environments lists the environments used in the project; the three shown above are created by default whenever a project is initialized.

- The plugins folder: This folder contains the configuration files for all installed plugins, which can be extractors, loaders, transformers, or orchestrators.
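The active environment can come from more than one place. A minimal model, assuming the precedence described in Meltano’s environments documentation (the --environment option first, then the MELTANO_ENVIRONMENT environment variable, then default_environment from meltano.yml), might look like this. `resolve_environment` is a hypothetical helper, not Meltano’s code.

```python
from typing import Mapping, Optional


def resolve_environment(cli_option: Optional[str],
                        env_vars: Mapping[str, str],
                        default_environment: Optional[str]) -> Optional[str]:
    """Pick the active environment: explicit CLI option, then the
    MELTANO_ENVIRONMENT variable, then meltano.yml's default_environment."""
    if cli_option:
        return cli_option
    from_env = env_vars.get("MELTANO_ENVIRONMENT")
    if from_env:
        return from_env
    return default_environment


print(resolve_environment(None, {}, "dev"))                                 # dev
print(resolve_environment(None, {"MELTANO_ENVIRONMENT": "test"}, "dev"))    # test
print(resolve_environment("prod", {"MELTANO_ENVIRONMENT": "test"}, "dev"))  # prod
```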

Adding a Snowflake Extractor to Your Project

Next, you’ll need to add an extractor (the plugin that pulls data from a source) to your project. Here, you’ll install the Snowflake extractor (tap-snowflake) by executing:

```shell
meltano add extractor tap-snowflake
```

After the installation, the meltano.yml file is automatically modified to include the following:

```yaml
plugins:
  extractors:
  - name: tap-snowflake
    variant: transferwise
    pip_url: pipelinewise-tap-snowflake
```

Configuring a Meltano Extractor

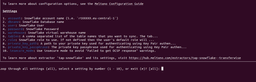

Next, you’ll need to set the necessary configurations to use the tap-snowflake extractor. Examples of such configurations are Snowflake account details, database, tables, data warehouse, etc. Configure the added extractor by executing:

```shell
meltano config tap-snowflake set --interactive
```

Work through each of the prompted settings (1 to 10), as shown in the screenshot.

Now, set the data replication method to FULL_TABLE. Configure the extractor’s metadata and select parameters by adding the lines below to the tap-snowflake entry under plugins/extractors in your meltano.yml file:

```yaml
metadata:
  '*':
    replication-method: FULL_TABLE
select:
- '*.*'
```

You can check out the Meltano documentation for other supported replication methods.
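Select rules are written as entity.attribute patterns with shell-style wildcards, so *.* means every attribute of every stream. The sketch below is a simplified model of that matching using Python’s fnmatch, not Meltano’s own matcher; the `is_selected` function is hypothetical, and the stream ID TEST-PUBLIC-USERS is an assumed example of how tap-snowflake might name the TEST.PUBLIC.USERS table.

```python
from fnmatch import fnmatchcase


def is_selected(pattern: str, stream: str, attribute: str) -> bool:
    """Simplified model of an entity.attribute select rule.
    The first dot separates the stream pattern from the attribute pattern."""
    stream_pattern, _, attribute_pattern = pattern.partition(".")
    # Simplification: a bare entity pattern is treated as "all attributes".
    return (fnmatchcase(stream, stream_pattern)
            and fnmatchcase(attribute, attribute_pattern or "*"))


stream = "TEST-PUBLIC-USERS"  # assumed stream ID for TEST.PUBLIC.USERS
for rule in ["*.*", "*.EMAIL"]:
    print(rule, [col for col in ["EMAIL", "NAME"] if is_selected(rule, stream, col)])
```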

The select setting in the code above selects everything. To select only certain columns in your Snowflake table, for example EMAIL, set select as follows:

```yaml
select:
- '*.EMAIL'
```

Testing Extractor Configuration

It’s always good to test your configuration before using it. Test your tap-snowflake extractor configuration by running the command below in your terminal (this is optional but recommended):

```shell
meltano config tap-snowflake test
```

If any of your settings is faulty, the test will throw an error.
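The test command above checks the connection for you. If you also want a quick local sanity check before that, for example in CI, a sketch like the one below can flag settings that are empty or that still contain an unexpanded ${...} placeholder. The `config_problems` function is hypothetical, and the list of required keys is an assumption based on the settings used in this article, not the extractor’s official schema.

```python
import re
from typing import Dict, Iterable, List

PLACEHOLDER = re.compile(r"\$\{[A-Za-z_][A-Za-z0-9_]*\}")


def config_problems(config: Dict[str, str], required: Iterable[str]) -> List[str]:
    """List problems: missing or empty required settings, and
    values that still contain an unexpanded ${VAR} placeholder."""
    problems = []
    for key in required:
        if not config.get(key):
            problems.append(f"missing setting: {key}")
    for key, value in config.items():
        if isinstance(value, str) and PLACEHOLDER.search(value):
            problems.append(f"unexpanded placeholder in {key}: {value}")
    return problems


# Assumed required keys, mirroring the settings used in this article.
REQUIRED = ["account", "dbname", "user", "warehouse", "tables"]
sample = {"account": "", "dbname": "TEST", "user": "${DEVELOPER}",
          "warehouse": "DEV", "tables": "TEST.PUBLIC.USERS"}
for problem in config_problems(sample, REQUIRED):
    print(problem)
```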

Now, you need to install the loader, i.e., the plugin that writes the extracted data to its destination (in this case, target-snowflake). Add the target-snowflake loader by executing:

```shell
meltano add loader target-snowflake
```

When the loader is installed, the meltano.yml file is automatically updated and the lines below are added under plugins:

```yaml
loaders:
- name: target-snowflake
```

Configuring Your Meltano Loader

Next, you’ll need to configure the installed loader (target-snowflake). It uses the same settings as the extractor (tap-snowflake). Configure the target-snowflake loader by executing:

```shell
meltano config target-snowflake set --interactive
```

Testing Loader Configuration

As an optional step to test if the configuration is done correctly, execute the following:

```shell
meltano config target-snowflake test
```

If your target-snowflake loader isn’t correctly configured, an error will be thrown when you try to test the configuration.

Adding a Transformer

Next, we’ll add a transformer, which is a plugin for running SQL-based transformations on data stored in a warehouse. We’ll be using the dbt-snowflake transformer; you can add this transformer by executing the following:

```shell
meltano add transformer dbt-snowflake
```

The transformer will be used to create a user-specific schema, isolating each user’s development environment from the rest of the team.

Configuring the Transformer

Next, we’ll define the transformer’s Snowflake connection settings. They are configured the same way as the extractor and loader, i.e., by executing the following:

```shell
meltano config dbt-snowflake set --interactive
```

Creating an Isolated Test Environment

Next, create an isolated test environment named test. This environment will be configured to extract data from the Snowflake TEST database, using a schema prefixed with the developer’s username. Create the test environment by executing:

```shell
meltano environment add test
```

Configuring the Test Environment

First, define the configuration to be used under the test environment in meltano.yml as follows:

```yaml
environments:
- name: test
  config:
    plugins:
      extractors:
      - name: tap-snowflake
        config:
          dbname: TEST
          user: ${DEVELOPER}
          role: ${DEVELOPER}
          warehouse: DEV
          tables: TEST.${DEVELOPER}_PUBLIC.USERS
          account: ${SNOWFLAKE_ACCT}
        metadata:
          '*':
            replication-method: FULL_TABLE
        select:
        - '*.EMAIL'
      loaders:
      - name: target-snowflake
        config:
          dbname: TEST
          user: ${DEVELOPER}
          role: ${DEVELOPER}
          warehouse: DEV
          tables: TEST.${DEVELOPER}_PUBLIC.USERS
      transformers:
      - name: dbt-snowflake
        config:
          user: ${DEVELOPER}
          role: ${DEVELOPER}
          warehouse: DEV
          database: TEST
          target_schema_prefix: ${DEVELOPER}_
          account: ${SNOWFLAKE_ACCT}
          schema: ${DEVELOPER}_PUBLIC
          tables: TEST.${DEVELOPER}_PUBLIC.USERS
  env:
    DEVELOPER: ...
    SNOWFLAKE_ACCT: ...
```

The code above configures the test environment as follows:

- The environments key lists the environments used in the project.

- The name key specifies the environment whose configuration is defined here, in this case test.

- The config key holds the plugin configuration for this environment.

- plugins groups the extractors, loaders, and transformers configured for this environment.

- extractors contains tap-snowflake, configured with the database TEST and the table TEST.<Developer Username>_PUBLIC.USERS; that is, the schema name is prefixed with the developer’s username so that each developer is isolated.

- The last important piece is env, which sets DEVELOPER (the developer’s username, referenced throughout the configuration as ${DEVELOPER}) and SNOWFLAKE_ACCT (the Snowflake account in use, also referenced throughout), so neither value has to be repeated.
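The ${DEVELOPER} and ${SNOWFLAKE_ACCT} references behave like template variables. The sketch below models that substitution with Python’s string.Template, walking a nested config the way the test environment is nested. It illustrates the idea only and is not how Meltano expands variables internally; the `expand` function, the developer name ADAM, and the account value are made up.

```python
from string import Template
from typing import Any, Mapping


def expand(node: Any, env: Mapping[str, str]) -> Any:
    """Recursively substitute ${VAR} references in strings inside
    nested dicts and lists; unknown variables are left untouched."""
    if isinstance(node, str):
        return Template(node).safe_substitute(env)
    if isinstance(node, dict):
        return {key: expand(value, env) for key, value in node.items()}
    if isinstance(node, list):
        return [expand(item, env) for item in node]
    return node


extractor = {
    "name": "tap-snowflake",
    "config": {
        "dbname": "TEST",
        "user": "${DEVELOPER}",
        "tables": "TEST.${DEVELOPER}_PUBLIC.USERS",
        "account": "${SNOWFLAKE_ACCT}",
    },
}
env = {"DEVELOPER": "ADAM", "SNOWFLAKE_ACCT": "example-account"}  # made-up values
print(expand(extractor, env)["config"]["tables"])  # TEST.ADAM_PUBLIC.USERS
```

Changing only the DEVELOPER value is enough to point every plugin at a different developer’s schema.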

You may copy the file from this GitHub repo.

Creating an Isolated Schema

Next, create an isolated schema that separates the developer’s work from other members of the team. The schema name is prefixed with the developer’s username as follows: <DEVELOPER USERNAME>_PUBLIC.
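To see what that naming rule produces, and why it helps to normalize case, here is a small sketch. It assumes unquoted Snowflake identifiers, which Snowflake stores and resolves in uppercase, so the usernames adam and Adam would map to the same schema. `developer_schema` and `find_collisions` are hypothetical helpers.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def developer_schema(username: str, base: str = "PUBLIC") -> str:
    """Build the per-developer schema name, e.g. 'adam' -> 'ADAM_PUBLIC'.
    Unquoted Snowflake identifiers resolve to uppercase, so normalize here."""
    return f"{username.strip().upper()}_{base.upper()}"


def find_collisions(usernames: Iterable[str]) -> Dict[str, List[str]]:
    """Return schema names that more than one developer would share."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for name in usernames:
        owners[developer_schema(name)].append(name)
    return {schema: names for schema, names in owners.items() if len(names) > 1}


print(developer_schema("adam"))                  # ADAM_PUBLIC
print(find_collisions(["adam", "Adam", "zoe"]))  # {'ADAM_PUBLIC': ['adam', 'Adam']}
```

A collision means two developers would write to the same schema, which defeats the per-developer isolation.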

Create the schema by executing:

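```shell
meltano --environment=test invoke dbt-snowflake run
```

The --environment=test option makes dbt-snowflake use the settings defined under the test environment, including the developer-specific schema prefix.

Configuring the Prod Environment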

Now you’ll need to define the configuration for the prod environment, i.e., set the Snowflake production database and table to extract data from. Without this step, the prod environment will use the default tap-snowflake (extractor) configuration, meaning the configuration defined earlier without explicitly stating an environment. Configure the prod environment by executing:

```shell
meltano --environment=prod config tap-snowflake set --interactive
```

Running a Test Against Snowflake

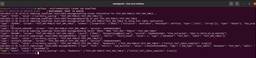

Next, you’ll run a test against Snowflake under the test environment without interfering with the prod environment. The --environment=test option tells Meltano to use the configuration defined under the test environment. Run the test using the test environment by executing:

```shell
meltano --environment=test invoke tap-snowflake
```

Note that without --environment=test, Meltano falls back to the default environment (dev) and the default tap-snowflake configuration for this run.

The result of the test run can be found in the image below.

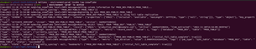

To extract data from the production database in Snowflake, run the extraction under the prod environment by executing:

```shell
meltano --environment=prod invoke tap-snowflake
```

The --environment=prod option tells Meltano to use the configuration defined under the prod environment. After the execution, the contents of your Snowflake production database are printed as shown in the image below.

By following the steps above, you’ve successfully run an isolated test against Snowflake. The run under the test environment printed the test email addresses for the notification, while the run under the prod environment printed the production email addresses, as shown in the two pictures above.

This achieves an isolated test environment: each run uses the configuration specific to the activated environment, with no interaction between environments, and a schema prefixed with the developer’s username isolates each developer from other team members.
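The per-environment behavior can be pictured as layering: the active environment’s settings are applied on top of the plugin’s base configuration, and anything the environment doesn’t override falls through. The sketch below is a simplified model of that layering with made-up values and a hypothetical `effective_config` function. It is not Meltano’s resolution code, which also considers other sources such as environment variables.

```python
from typing import Dict, Optional

# Made-up base settings, standing in for the default plugin configuration.
BASE = {"account": "example-account", "dbname": "DEV_DB", "warehouse": "COMPUTE"}
ENVIRONMENTS: Dict[str, Dict[str, str]] = {
    "test": {"dbname": "TEST", "warehouse": "DEV"},
    "prod": {"dbname": "PROD"},
}


def effective_config(environment: Optional[str]) -> Dict[str, str]:
    """Merge the environment's overrides over the base plugin config;
    later keys in the merge win, and the base dict is never modified."""
    overrides = ENVIRONMENTS.get(environment or "", {})
    return {**BASE, **overrides}


print(effective_config("test")["dbname"])  # TEST
print(effective_config("prod")["dbname"])  # PROD
```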

You can follow the same steps to test other functionality in isolated environments, e.g., by creating a new Meltano environment and installing extractors or loaders other than the ones used in this guide.

Conclusion

This article introduced isolated test environments and their benefits, highlighting how they can be useful in your own DataOps processes. You also learned how to implement an isolated test environment using a Meltano CLI environment and a Snowflake extractor.

The source code for the guide implemented in this article can be found in this GitHub repo.

Thanks to our guest writer Adam Mustapha for this contribution.